Ransomware and Recovery

Threat vectors in cybersecurity are always evolving, and one of the latest trends is the proliferation of ransomware. Whatever the size of your business, and even if it’s just your home or personal system, you should always ask yourself a rather serious question:

“If all my digital assets are lost or destroyed, what impact will that have on me?”

This kind of questioning usually falls under the umbrella of Disaster Recovery (DR), an area that individuals and small businesses rarely take a serious look at. Far too many people shrug off any kind of budgeting or planning for DR because they feel it just can’t happen to them. “My house/office is safe from fire, flood, etc. Why should I waste valuable resources on something that is likely to never happen?” It’s certainly easy to sympathize with such thinking. But that’s where ransomware brings a whole new perspective on the need for a good DR plan. Ransomware doesn’t care how rich or poor you are. It doesn’t care how good a neighborhood you live in. It doesn’t care whether you have just a few megabytes of important files or petabytes of data on your system. It’s completely non-discriminatory: an equal opportunity threat, and everyone is equally subject to its malicious payload.

Now that you’ve come to grips with the fact that you are just as likely a victim as a Fortune 500 company, the next step is to come up with a plan of action to deal with it. First and foremost, you should focus on prevention. The old saying is that “an ounce of prevention is worth a pound of cure.”

The most common way a virus gets onto a system is through individuals who open links to malicious websites, download software from questionable sources, or fall victim to social engineering. The cliché is that “knowledge is power,” so take time to educate yourself and your staff on what to look for to avoid being a gateway for malicious code into your system.

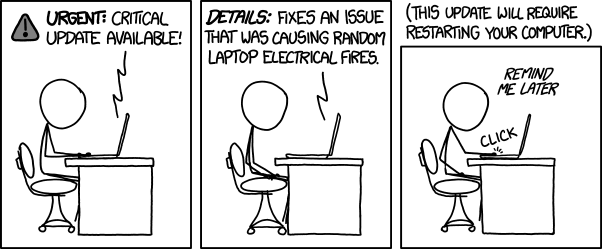

Only slightly less common is the use of system exploits to breach your system. As exploits are discovered, software vendors are usually quick to release updates to mitigate them. This, however, requires that the end user keep their systems up-to-date with the latest software patches. As people are inherently lazy about keeping their systems updated, this provides a window of opportunity to attack the unpatched system.

Source: https://xkcd.com/1328/

Should an attacker get past those defenses, remember that ransomware is malicious software just like any other virus or malware out there. As such, keep your antivirus/anti-malware software up to date. In larger corporations, it is common to also install Intrusion Detection Systems (IDS) and/or Intrusion Prevention Systems (IPS). However, it should be noted that antivirus (AV) software is a reactive measure. By the time the AV software has detected the ransomware, it may have already managed to deliver its payload. To limit the amount of damage it can do, you need to minimize the attack surface.

Many viruses run with the same privileges as the user who is infected, so you should limit the amount of access that each user has on the system. A user should only have as many rights as are required to do their job. Should they need additional rights for an abnormal task, those additional rights should be granted only for the shortest amount of time necessary to complete the task. These concepts are known as Just Enough Administration and Just In Time Administration. As an example, Alice works in marketing. As such, she has read/write access to the marketing file share. As an employee, she also has access to the corporate policies file share, but she only has read access to this resource. When she’s asked to help Bob with a new product the company is developing, she might be given temporary read/write access to the project folder, which will only be granted for the duration that she’s assigned to that project. Minimizing access in this way is generally a good practice, as it also helps to protect against insider corporate theft, disgruntled employees, etc. But, for this discussion, it also greatly reduces the amount of damage that can be done by a virus.
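The Alice example can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular operating system or directory service implements it; the `Grant` class, the `is_allowed` function, the share names, and the 30-day project length are all made up for the example.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass(frozen=True)
class Grant:
    """One hypothetical access grant: who, on what, which rights, until when."""
    user: str
    resource: str
    rights: frozenset                    # e.g. frozenset({"read", "write"})
    expires: Optional[datetime] = None   # None means a standing grant


def is_allowed(grants, user, resource, right, now):
    """Return True if any unexpired grant gives `user` this right on `resource`."""
    for g in grants:
        if g.user != user or g.resource != resource:
            continue
        if g.expires is not None and now >= g.expires:
            continue  # the temporary grant has lapsed
        if right in g.rights:
            return True
    return False


start = datetime(2024, 1, 1)  # arbitrary date the project assignment begins
grants = [
    # Just enough: read/write on her own share, read-only on policies.
    Grant("alice", "marketing", frozenset({"read", "write"})),
    Grant("alice", "policies", frozenset({"read"})),
    # Just in time: project access ends with the assignment (30 days, assumed).
    Grant("alice", "project-x", frozenset({"read", "write"}),
          expires=start + timedelta(days=30)),
]

print(is_allowed(grants, "alice", "policies", "write", start))
print(is_allowed(grants, "alice", "project-x", "write", start + timedelta(days=10)))
print(is_allowed(grants, "alice", "project-x", "write", start + timedelta(days=31)))
```

Ransomware running as Alice can only write where `is_allowed` would say yes for her, so the policies share stays untouched, and the project folder is out of reach again once the temporary grant expires.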

An important note: It is incredibly bad practice to use a default administrator account to perform all actions and services within a system, especially where backups are concerned. Specific services (such as backups) should have isolated accounts and credentials that only grant the service access to what is needed for that task. This will, for instance, prevent a careless user logged in as an admin from being able to infect your backups, because the admin should not have access to the backup file systems by default.

Houston, we have a problem!

All the training and prevention is well and good. It will take you a long way for very little cost. However, inevitably, something will get through. Data loss will occur. And, make no mistake about it, it’s not a matter of “if,” it’s a matter of “when.” How you deal with it at this point largely depends on what steps you took prior to the event happening. If you don’t have a DR plan, then you are essentially left with picking between two options:

- Pay the ransom

- Deal with the loss of data

In rare cases, older ransomware used relatively weak encryption, so it may be possible to crack the encryption and recover your files. However, any modern ransomware attack will be using encryption of sufficient strength to make this kind of effort “computationally infeasible” (meaning it would take far too long to crack, even with supercomputers at your disposal).
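To get a feel for what “computationally infeasible” means, here is a back-of-the-envelope calculation. The numbers are assumptions chosen for illustration: a 128-bit key, and an attacker who can somehow test 10^18 keys every second. Key length is also only one factor in how strong an encryption scheme really is, so treat this as a rough sketch.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600


def years_to_exhaust(key_bits: int, guesses_per_second: float) -> float:
    """Years needed to try every possible key of the given length."""
    return (2 ** key_bits) / guesses_per_second / SECONDS_PER_YEAR


# Assumed figures: a 128-bit key and 10**18 guesses per second.
years = years_to_exhaust(128, 1e18)
print(f"{years:.2e} years to try every key")
```

Under those assumptions the answer is on the order of ten trillion years, and that is with a guessing rate that is generous to the attacker.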

If you have taken the time to put a DR plan in place, then “dealing with the loss” usually gets translated into “recover your data from backups.” However, a good backup strategy is more involved than just making a copy of your data. In fact, you should ideally have multiple copies of your data. This falls under the principles of the 3-2-1 Rule. This simple concept has the following tenets:

- 3 copies of your data should be maintained

- 2 different technologies and/or mediums should be used

- 1 copy of your data should be stored off-site

Working in reverse order, it only makes sense that one copy should be maintained off-site. Should a building succumb to fire, flooding, tornado, burglary, etc., then all effort is in vain if a copy is not stored in a geographically separated location. How far away this location needs to be is largely dependent on the threat that you are trying to mitigate. If you are attempting to avoid data loss from hurricane damage, for instance, then your off-site location should be several hundred miles away, preferably far from the coastal region. For mitigation of a ransomware attack, on the other hand, the building down the block would be sufficient, so long as the two sites don’t share network or transport systems that would allow the virus to easily spread from one site to the other.

Next up, 2 different technologies and/or mediums should be used. Why? Simply to prevent an exploit or fault on one system from simultaneously affecting both storage systems. If every system that holds your data is using the exact same hardware and software, then it’s entirely possible that a fault which affects one could affect all your backups. It could be a manufacturing flaw, a bad firmware update, a bad software patch, a common security vulnerability, or almost anything really. If it is shared among all your systems, it is a potential single point of failure which could lead to a complete loss of all your data. Some of this can be mitigated by rolling out updates to systems at different intervals, for instance. But delaying updates for too long potentially leaves you exposed longer to known threats.

Last is that you should have 3 copies of your data. Aside from your source and off-site copies, a good DR plan will also include a third, local copy. There are a few reasons for this. First is that the local copy provides for a faster recovery time. If you have a loss of data and need to wait 2 days to recover while you download your off-site backups, that’s 2 days’ worth of downtime and lost productivity. Aside from the obvious loss of money through wages paid to idle employees, there’s also brand reputation to consider. Having a local backup provides a much quicker return to operations. In fact, some DR software will even let you run directly from your local backups while it restores the normal production environment in the background. This can cut the time it takes to get back to an operational environment down to a handful of minutes.

The second reason for a local backup is that it is often much faster to create. Once created, the local backup can then be copied off-site over a slower link or connection. This is particularly important if your backup technology must pause a service while the backup is being created.
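To put rough numbers on the difference between a local and an off-site copy, the sketch below estimates how long it takes to move a backup over a given link. The 2 TB data set, the link speeds, and the 80% efficiency factor are assumptions picked for illustration; plug in your own figures.

```python
def transfer_hours(size_gb: float, link_mbps: float, efficiency: float = 0.8) -> float:
    """Hours to move size_gb gigabytes over a link of link_mbps megabits per second.

    `efficiency` is an assumed allowance for protocol overhead and contention.
    """
    size_megabits = size_gb * 1000 * 8        # decimal gigabytes -> megabits
    effective_mbps = link_mbps * efficiency
    return size_megabits / effective_mbps / 3600


offsite = transfer_hours(2000, 100)      # 2 TB over a 100 Mbit/s internet link
local = transfer_hours(2000, 10_000)     # 2 TB over a 10 Gbit/s local network

print(f"off-site: {offsite:.1f} hours, local: {local:.2f} hours")
```

With these assumed figures the off-site transfer takes a little over two days, while the local one finishes in a bit more than half an hour. The same arithmetic applies in both directions, whether you are pulling a restore down or pushing a fresh backup up.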

As of late, some have extended the 3-2-1 rule to be the 3-2-1-1 rule. Some even go as far as 3-2-1-1-0. The extra 1 that some people add means that at least one copy of your data should be off-line, be it tapes that are not available for direct/immediate access, hard drives that are rotated, or some other mechanism that prevents immediate access to the backups. With the advent of Virtual Tape Libraries (VTL), having your off-site backup use a VTL can satisfy both of the 1s in the extended rule.
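If you want to sanity-check your own setup against the 3-2-1-1 rule, the checks are simple enough to write down. The sketch below is a hypothetical example: the `Copy` class, the `check_3211` function, and the sample inventory are invented for illustration, and deciding whether a given copy truly counts as off-site or off-line is still up to you.

```python
from dataclasses import dataclass


@dataclass
class Copy:
    """One copy of the data in a hypothetical backup inventory."""
    name: str
    medium: str      # e.g. "disk", "tape", "cloud"
    offsite: bool
    offline: bool


def check_3211(copies):
    """Return a dict mapping each part of the 3-2-1-1 rule to True or False."""
    return {
        "3 copies": len(copies) >= 3,
        "2 mediums": len({c.medium for c in copies}) >= 2,
        "1 off-site": any(c.offsite for c in copies),
        "1 off-line": any(c.offline for c in copies),
    }


copies = [
    Copy("production", "disk", offsite=False, offline=False),
    Copy("local backup", "disk", offsite=False, offline=False),
    Copy("rotated tapes", "tape", offsite=True, offline=True),
]

result = check_3211(copies)
for rule, ok in result.items():
    print(f"{rule}: {'ok' if ok else 'MISSING'}")
```

Drop the tape entry from the list and three of the four checks fail at once, which shows how much a single well-placed copy can contribute.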

Trust, but verify.

Something that is not covered by the 3-2-1 rule, but is a tenet of a good DR plan, is testing your backups. Make sure that you periodically verify that your backups are doing what you think they are and are free of errors. Ensuring your backups have zero errors is where the extra zero comes from in 3-2-1-1-0. But ensuring zero errors goes beyond just making sure the data isn’t corrupted. The data could be perfectly valid, but still not usable. If you can’t recover from your backups, then there’s no point in investing the time and money in making them. Depending on the functionality of your recovery software, you may be able to create a lab environment where your systems can be restored and tested. Some DR software can even go so far as to automate such testing for you and run custom scripts against the environment. Make sure the tools are doing what they are supposed to do and, equally important, make sure your staff knows how to properly use those tools to conduct a recovery.
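As a starting point for the “isn’t corrupted” part of that testing, you can record a checksum for every file at backup time and compare it against the files you get back from a test restore. The sketch below does that with SHA-256 from Python’s standard library. The function names and the throwaway demo directories are made up for the example, and a clean result only tells you the bytes match. It does not prove that the restored system actually works.

```python
import hashlib
import shutil
import tempfile
from pathlib import Path


def sha256_of(path: Path) -> str:
    """Hash a file in 1 MiB chunks so large files don't need to fit in memory."""
    h = hashlib.sha256()
    with path.open("rb") as f:
        for chunk in iter(lambda: f.read(1 << 20), b""):
            h.update(chunk)
    return h.hexdigest()


def build_manifest(root: Path) -> dict:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {p.relative_to(root).as_posix(): sha256_of(p)
            for p in sorted(root.rglob("*")) if p.is_file()}


def verify_restore(manifest: dict, restored_root: Path) -> list:
    """Return the relative paths that are missing or differ from the manifest."""
    problems = []
    for rel, digest in manifest.items():
        candidate = restored_root / rel
        if not candidate.is_file() or sha256_of(candidate) != digest:
            problems.append(rel)
    return problems


# Demo in a throwaway directory: "source" stands in for production data,
# "restored" for the result of a test restore.
with tempfile.TemporaryDirectory() as tmp:
    source, restored = Path(tmp) / "source", Path(tmp) / "restored"
    source.mkdir()
    (source / "report.txt").write_text("quarterly numbers")
    manifest = build_manifest(source)

    shutil.copytree(source, restored)
    clean = verify_restore(manifest, restored)     # faithful restore

    (restored / "report.txt").write_text("garbage")
    damaged = verify_restore(manifest, restored)   # altered file gets flagged

print(clean, damaged)
```

A check like this is easy to schedule, but it complements a real restore test in a lab environment; it does not replace one.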

One last note that should go without saying: please make sure you have cleared the threat from your systems before conducting a restoration from backups. This includes verifying that your backups do not themselves contain the ransomware, which would otherwise be reintroduced by the restoration.

To summarize, remember that you are not being singled out or targeted any more than the next person. Ransomware is an equal opportunity software attack. Your first step in avoiding the devastating effects of a ransomware attack is to keep everyone trained on how to avoid letting malicious software into your environment in the first place. Understand that people are inherently fallible and need an automated safety net in the form of antivirus/anti-malware software. Systems are vulnerable and must be regularly patched and maintained. Your systems should be as isolated as is practical to reduce the amount of damage that can be done by a breach. Plan for the fact that an incident of some kind is inevitable. Your plan should always incorporate the 3-2-1 rule as a central focus. Additionally, you should test your recovery process to make sure you can restore your data when the day finally comes to use it.